Graphics: Nothing to PBR

I am fascinated by graphics programming, game engine development and all things low-level. For this project I took the time to build a proper graphics pipeline for a PBR rendering system. I'll likely reuse the shaders and model-loading classes in future projects, but for my next personal graphics project I'm tempted to pick up some Vulkan and make greater use of namespaces and more modern C++ practices, so that I end up with a sturdier foundation to build on.

What steps did I take?

I'm writing this up a little while after the fact, but the major steps I took were:

- Setting up CMake/SDL/OpenGL boilerplate

- Implementing camera movement

- Loading in models and textures

- Implementing PBR (Physically Based Rendering)

- Implementing normal maps

Much of what I implemented here was made possible by LearnOpenGL.com, 3Blue1Brown's series on Linear Algebra, and my time as an undergrad at Falmouth.

Setting up CMake/SDL/OpenGL Boilerplate

Back in the second year of my undergrad in Computing for Games, I worked with SDL and OpenGL to render some terrain in C++. The only problem is that, in the time since, I've come to hate my reliance on Visual Studio and the undergrad-level programming style I had back then.

This pushed me to finally pick up some C++ build system knowledge (CMake) and work through the initial stages of LearnOpenGL's tutorial using the libraries I preferred. I wanted to stay within one library ecosystem, so pairing SDL_image with SDL made more sense than combining GLFW 3 with the separate single-header library stb_image. Before long I had a simple textured square.
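For reference, a textured square is usually described as four interleaved vertices and six indices (two triangles). The sketch below is hypothetical: the names `Vertex`, `kQuadVertices` and `kQuadIndices` are mine, not the project's, and it only shows the CPU-side data plus the stride and byte offsets that would be handed to `glVertexAttribPointer`. Buffer creation and draw calls are left out.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// One interleaved vertex: position (x, y, z) followed by texture coordinates (u, v).
struct Vertex {
    float position[3];
    float uv[2];
};

// The attribute stride assumes no padding between the two arrays.
static_assert(sizeof(Vertex) == 5 * sizeof(float), "Vertex must be tightly packed");

// Four corners of a unit square centred on the origin.
// UV (0, 0) is the bottom-left corner in OpenGL's texture convention.
constexpr std::array<Vertex, 4> kQuadVertices{{
    {{-0.5f, -0.5f, 0.0f}, {0.0f, 0.0f}},  // bottom-left
    {{ 0.5f, -0.5f, 0.0f}, {1.0f, 0.0f}},  // bottom-right
    {{ 0.5f,  0.5f, 0.0f}, {1.0f, 1.0f}},  // top-right
    {{-0.5f,  0.5f, 0.0f}, {0.0f, 1.0f}},  // top-left
}};

// Two counter-clockwise triangles sharing the bottom-left/top-right diagonal.
constexpr std::array<std::uint32_t, 6> kQuadIndices{0, 1, 2, 2, 3, 0};

// Values that would be passed as the stride and byte offsets to glVertexAttribPointer.
constexpr std::size_t kStride = sizeof(Vertex);
constexpr std::size_t kPositionOffset = offsetof(Vertex, position);
constexpr std::size_t kUvOffset = offsetof(Vertex, uv);
```

With the index buffer bound to `GL_ELEMENT_ARRAY_BUFFER`, the quad would then be drawn with `glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, nullptr)`.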

Implementing Camera Movement

Following on from the textured square, I implemented an MVP (model-view-projection) stack. While I was at it, I created objects that hold the data to be rendered along with their transforms. In this project practically all object transformations are stored as matrices. I understand this isn't ideal in the long term, but it's good enough for a simple renderer.
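The MVP stack boils down to multiplying three matrices, projection * view * model, and transforming each vertex by the result. The sketch below is hand-rolled for illustration only: the post doesn't say which maths library was used, and the names here (`lookAt`, `perspective`, `cameraFront`, `modelViewProjection`) are assumptions that mirror GLM's right-handed, column-major conventions and LearnOpenGL's yaw/pitch camera.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Vec4 { float x, y, z, w; };
// Column-major 4x4 matrix (m[column][row]), the same layout OpenGL and GLM use.
using Mat4 = std::array<std::array<float, 4>, 4>;

constexpr float kPi = 3.14159265358979f;

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

Mat4 identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// Returns a * b, i.e. the transform that applies b first and then a.
Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int col = 0; col < 4; ++col)
        for (int row = 0; row < 4; ++row)
            for (int k = 0; k < 4; ++k) r[col][row] += a[k][row] * b[col][k];
    return r;
}

Vec4 transformPoint(const Mat4& m, Vec4 v) {
    const float in[4] = {v.x, v.y, v.z, v.w};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col) out[row] += m[col][row] * in[col];
    return {out[0], out[1], out[2], out[3]};
}

// Right-handed perspective projection mapping depth into OpenGL's [-1, 1] range.
Mat4 perspective(float fovyRadians, float aspect, float zNear, float zFar) {
    const float f = 1.0f / std::tan(fovyRadians / 2.0f);
    Mat4 m{};
    m[0][0] = f / aspect;
    m[1][1] = f;
    m[2][2] = (zFar + zNear) / (zNear - zFar);
    m[2][3] = -1.0f;  // copies -z_view into w for the perspective divide
    m[3][2] = (2.0f * zFar * zNear) / (zNear - zFar);
    return m;
}

// View matrix for a camera at `eye` looking towards `target`.
Mat4 lookAt(Vec3 eye, Vec3 target, Vec3 up) {
    const Vec3 f = normalize(sub(target, eye));  // forward
    const Vec3 s = normalize(cross(f, up));      // right
    const Vec3 u = cross(s, f);                  // corrected up
    Mat4 m = identity();
    m[0][0] = s.x;  m[1][0] = s.y;  m[2][0] = s.z;
    m[0][1] = u.x;  m[1][1] = u.y;  m[2][1] = u.z;
    m[0][2] = -f.x; m[1][2] = -f.y; m[2][2] = -f.z;
    m[3][0] = -dot(s, eye);
    m[3][1] = -dot(u, eye);
    m[3][2] = dot(f, eye);
    return m;
}

// Forward vector from yaw/pitch angles in radians, as driven by mouse look.
// yaw = -pi/2 with pitch = 0 looks down -Z.
Vec3 cameraFront(float yaw, float pitch) {
    return normalize({std::cos(yaw) * std::cos(pitch), std::sin(pitch),
                      std::sin(yaw) * std::cos(pitch)});
}

// Clip-space transform: projection * view * model.
Mat4 modelViewProjection(const Mat4& projection, const Mat4& view, const Mat4& model) {
    return mul(projection, mul(view, model));
}
```

Moving the camera then amounts to nudging the eye position along the front vector (or the right vector for strafing) each frame and rebuilding the view matrix with `lookAt(eye, eye + front, up)`.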

Loading in models and textures

With the ability to look around objects, I moved on to loading models with Assimp, following LearnOpenGL's tutorial, and loading textures with the SDL2_image library.

I ran into some odd issues along the way getting the image textures to work correctly, but I figured them out. I believe the textures initially weren't being loaded into memory at all, then they were being loaded upside down, before, like Goldilocks, I got it just right.
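The upside-down stage is a common trap: image files usually store the top row first, while `glTexImage2D` treats the first row it receives as the bottom of the texture (t = 0). Below is a minimal sketch of one fix, assuming the pixels are flipped on the CPU after loading and before upload, for example using an `SDL_Surface`'s `pixels` and `pitch` fields. `flipRowsInPlace` is a hypothetical helper, and the post doesn't say which fix was actually used.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Flips an image vertically in place by swapping rows top-to-bottom.
// `pitch` is the number of bytes per row, which can exceed width * bytesPerPixel
// when rows are padded (SDL_Surface exposes this as its `pitch` field).
void flipRowsInPlace(std::uint8_t* pixels, int height, int pitch) {
    assert(pixels != nullptr && height >= 0 && pitch > 0);
    std::vector<std::uint8_t> scratch(static_cast<std::size_t>(pitch));
    for (int top = 0, bottom = height - 1; top < bottom; ++top, --bottom) {
        std::uint8_t* a = pixels + static_cast<std::size_t>(top) * pitch;
        std::uint8_t* b = pixels + static_cast<std::size_t>(bottom) * pitch;
        std::memcpy(scratch.data(), a, pitch);  // stash the top row
        std::memcpy(a, b, pitch);               // move the bottom row up
        std::memcpy(b, scratch.data(), pitch);  // put the old top row at the bottom
    }
}
```

An alternative is to leave the image alone and flip the texture coordinates instead, for example by passing Assimp's `aiProcess_FlipUVs` post-processing flag when importing the model.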

I went as far as implementing some simple diffuse lighting before deciding to jump straight to PBR, and that gave some pretty good results. In hindsight I should have switched to working on other aspects of the project, like giving it some purpose beyond rendering models, as this may have helped it hold my interest in the longer term.
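Simple diffuse lighting of this kind is typically the Lambertian term from LearnOpenGL's basic lighting chapter. Here is a sketch of that term evaluated on the CPU for clarity; in the renderer it would live in a fragment shader, and the function name and parameters are assumptions rather than the project's code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

// Lambertian diffuse: reflected light scales with the cosine of the angle
// between the surface normal and the direction towards the light, clamped at
// zero so surfaces facing away from the light receive nothing.
Vec3 lambertDiffuse(Vec3 normal, Vec3 toLight, Vec3 albedo, Vec3 lightColor) {
    const float nDotL = std::max(dot(normalize(normal), normalize(toLight)), 0.0f);
    return {albedo.x * lightColor.x * nDotL,
            albedo.y * lightColor.y * nDotL,
            albedo.z * lightColor.z * nDotL};
}
```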

Implementing PBR (Physically Based Rendering)

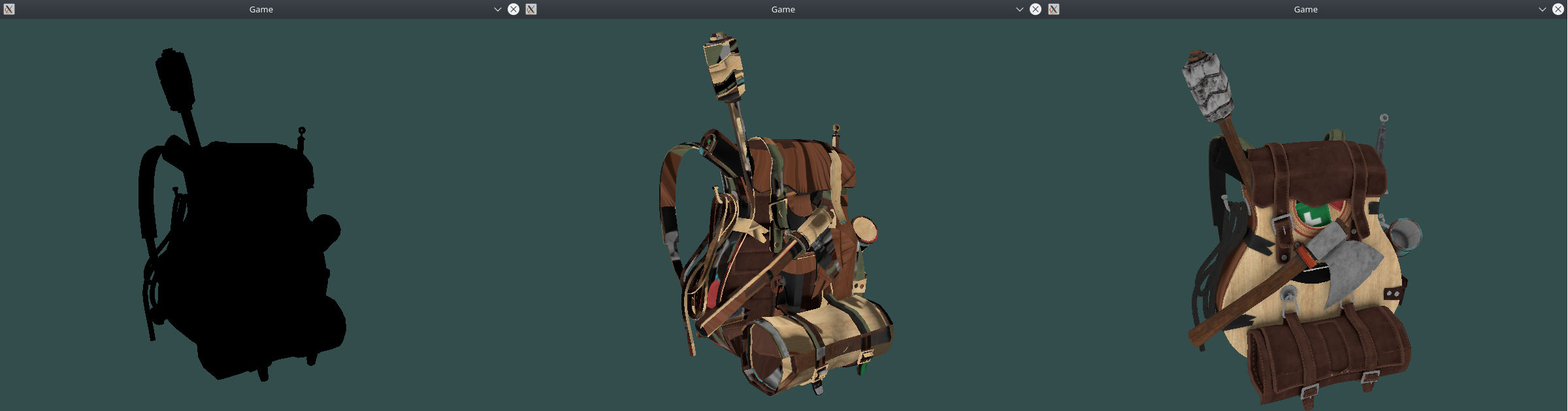

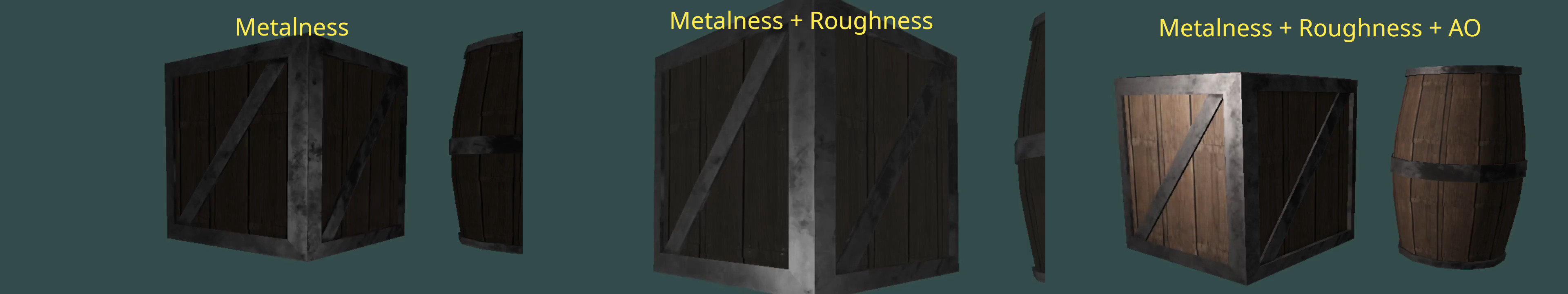

I then worked through LearnOpenGL's articles to implement PBR. I didn't get as far as image-based lighting (IBL), such as diffuse irradiance maps; I really just wanted to focus on getting direct lighting working. I did this by implementing metalness, roughness and AO (ambient occlusion), in that order. Other PBR workflows exist, such as specular/glossiness, but this was the one I chose.
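For direct lights, LearnOpenGL's PBR chapter evaluates a Cook-Torrance BRDF built from a GGX normal distribution, a Schlick-GGX/Smith geometry term and the Fresnel-Schlick approximation. Metalness decides the base reflectivity F0, and AO only scales a small constant ambient term. The sketch below transcribes that approach to C++ for a single light; it follows the tutorial rather than reproducing the project's shader, and all names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, Vec3 b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return v * (1.0f / len);
}
Vec3 mix(Vec3 a, Vec3 b, float t) { return a * (1.0f - t) + b * t; }

constexpr float kPi = 3.14159265358979f;

// Trowbridge-Reitz GGX: how many microfacets align with the halfway vector h.
// Roughness is squared first, as in LearnOpenGL.
float distributionGGX(Vec3 n, Vec3 h, float roughness) {
    const float a = roughness * roughness;
    const float a2 = a * a;
    const float nDotH = std::max(dot(n, h), 0.0f);
    const float denom = nDotH * nDotH * (a2 - 1.0f) + 1.0f;
    return a2 / (kPi * denom * denom);
}

// Schlick-GGX geometry term with k remapped for direct (point) lights.
float geometrySchlickGGX(float nDotX, float roughness) {
    const float r = roughness + 1.0f;
    const float k = (r * r) / 8.0f;
    return nDotX / (nDotX * (1.0f - k) + k);
}

// Fresnel-Schlick: reflectance rises towards 1 at grazing angles.
Vec3 fresnelSchlick(float cosTheta, Vec3 f0) {
    const float t = std::pow(std::max(1.0f - cosTheta, 0.0f), 5.0f);
    return f0 + (Vec3{1.0f, 1.0f, 1.0f} - f0) * t;
}

// Radiance leaving a point towards the viewer for one light, plus a small
// constant ambient term scaled by ambient occlusion.
Vec3 shadePoint(Vec3 n, Vec3 v, Vec3 l, Vec3 radiance, Vec3 albedo,
                float metallic, float roughness, float ao) {
    n = normalize(n); v = normalize(v); l = normalize(l);
    const Vec3 h = normalize(v + l);
    const float nDotV = std::max(dot(n, v), 0.0f);
    const float nDotL = std::max(dot(n, l), 0.0f);

    // Dielectrics reflect about 4% at normal incidence; metals tint it with albedo.
    const Vec3 f0 = mix(Vec3{0.04f, 0.04f, 0.04f}, albedo, metallic);
    const Vec3 f = fresnelSchlick(std::max(dot(h, v), 0.0f), f0);
    const float d = distributionGGX(n, h, roughness);
    const float g = geometrySchlickGGX(nDotV, roughness) * geometrySchlickGGX(nDotL, roughness);
    const Vec3 specular = f * (d * g / (4.0f * nDotV * nDotL + 0.0001f));

    // Light not reflected specularly is diffused; metals have no diffuse term.
    const Vec3 kD = (Vec3{1.0f, 1.0f, 1.0f} - f) * (1.0f - metallic);
    const Vec3 direct = (kD * albedo * (1.0f / kPi) + specular) * radiance * nDotL;
    const Vec3 ambient = albedo * (0.03f * ao);
    return direct + ambient;
}
```

This single-light version folds the ambient term in for brevity; with several lights, LearnOpenGL accumulates only the direct term per light and adds the ambient term once.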

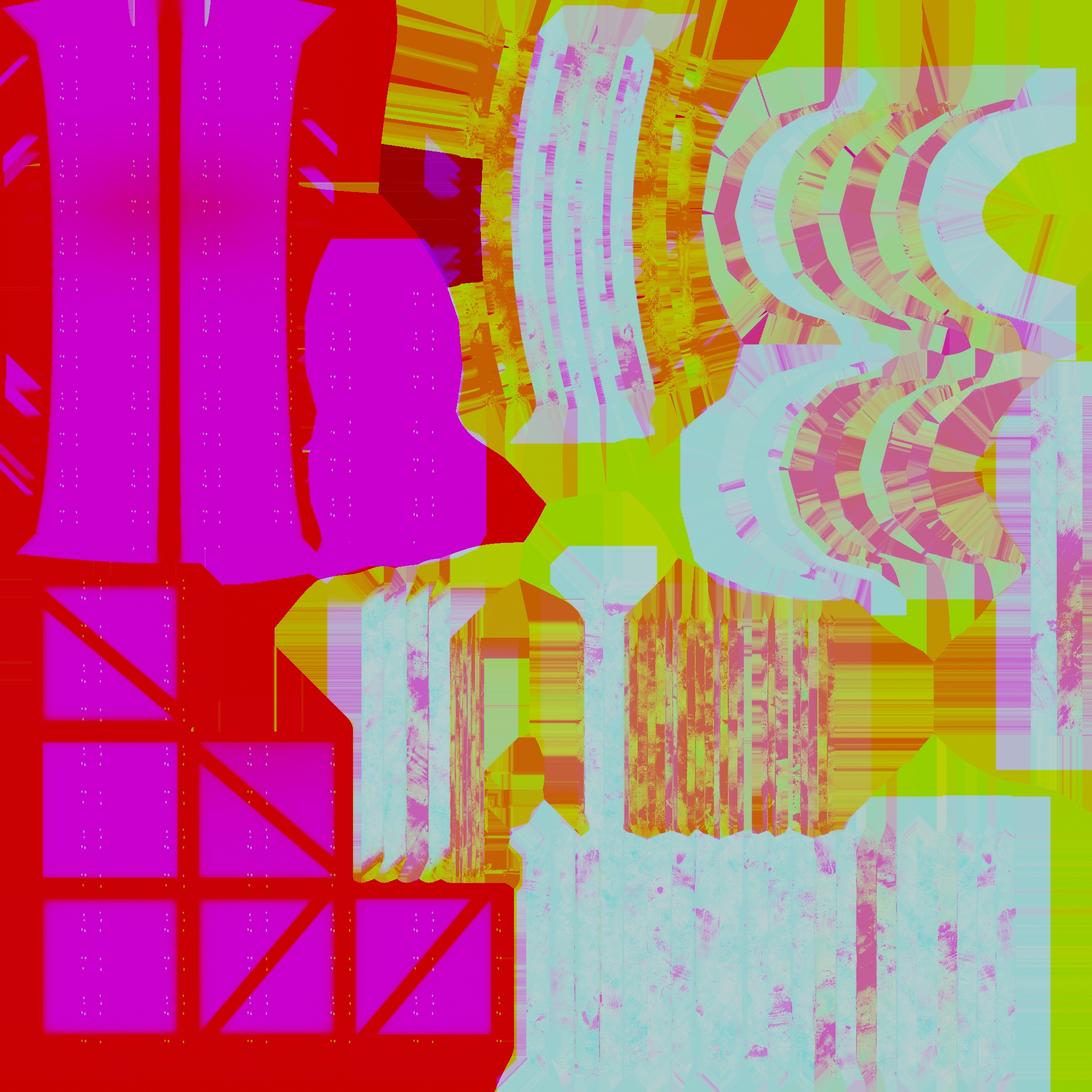

I was also interested in texture packing, so although it wasn't strictly necessary, I got it rendering after packing each layer into the RGB channels of a single texture (RMA, i.e. Roughness, Metalness, AO). This, combined with some Blender-fu, let me load a single texture for PBR instead of three.
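Packing is just interleaving: each of the three grayscale maps becomes one channel of an RGB image, and the shader reads them back by channel. The post did this in Blender; the sketch below shows the equivalent operation on the CPU, assuming 8-bit maps of equal size and the R = roughness, G = metalness, B = AO order implied by the name RMA. `packRMA` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Interleaves three single-channel maps into one RGB buffer:
// R = roughness, G = metalness, B = ambient occlusion.
// In the fragment shader these would be read back with something like:
//   vec3 rma = texture(rmaMap, uv).rgb;  // rma.r, rma.g, rma.b
std::vector<std::uint8_t> packRMA(const std::vector<std::uint8_t>& roughness,
                                  const std::vector<std::uint8_t>& metalness,
                                  const std::vector<std::uint8_t>& ao) {
    assert(roughness.size() == metalness.size() && metalness.size() == ao.size());
    std::vector<std::uint8_t> rgb(roughness.size() * 3);
    for (std::size_t i = 0; i < roughness.size(); ++i) {
        rgb[i * 3 + 0] = roughness[i];
        rgb[i * 3 + 1] = metalness[i];
        rgb[i * 3 + 2] = ao[i];
    }
    return rgb;
}
```

Because these channels hold data rather than colour, the packed texture would normally be uploaded with a linear internal format such as `GL_RGB8` rather than `GL_SRGB8`.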

This gave me this result:

Implementing Normal Maps

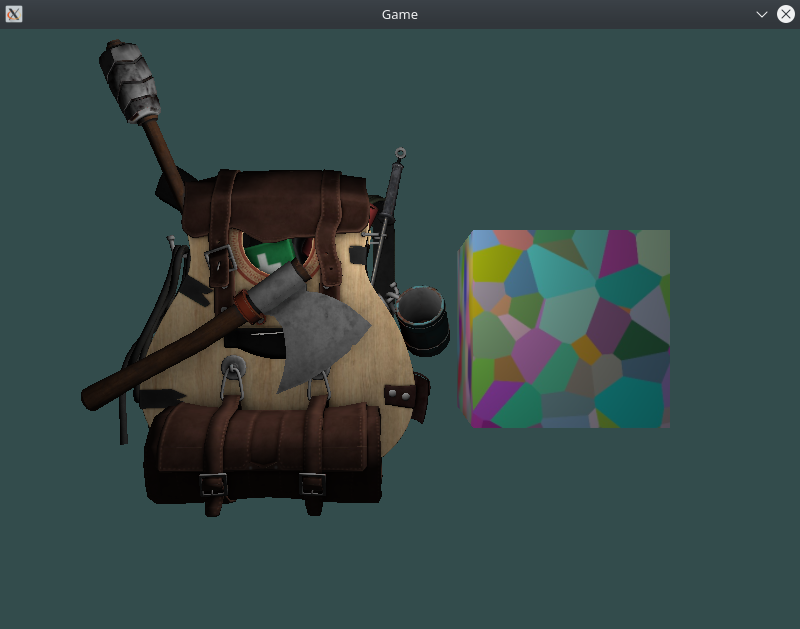

The last step I took was adding normal maps, as I felt PBR alone looked pretty flat. However, I also felt that I had been following LearnOpenGL's articles too closely and wasn't necessarily gaining a strong enough understanding of what the techniques were actually doing.

To counter this, I decided to calculate the tangent space at run time on the GPU in the geometry shader stage, rather than precomputing tangents and storing them in an extra vertex buffer as LearnOpenGL's implementation does. Doing this while referring to resources like 3Blue1Brown's series on Linear Algebra gave me a much-needed deeper understanding of linear algebra and how transforms actually work.
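Per-triangle tangent space comes from expressing two triangle edges in terms of the UV deltas along them and solving the resulting 2x2 system, which is the same maths LearnOpenGL uses when precomputing tangents. A geometry shader sees all three vertices of a triangle at once, so it can do this per face at run time. The sketch below is a CPU transcription in C++ for clarity, not the project's GLSL; names such as `triangleTangentFrame` and `tangentToSurface` are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float u, v; };
struct Vec3 { float x, y, z; };

Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return scale(v, 1.0f / len);
}

struct TangentFrame { Vec3 tangent, bitangent; };

// Each edge is a combination of the unknown tangent T and bitangent B,
// weighted by how far the UVs move along that edge:
//   E1 = dU1 * T + dV1 * B
//   E2 = dU2 * T + dV2 * B
// Inverting this 2x2 system gives T and B for the whole triangle.
TangentFrame triangleTangentFrame(Vec3 p0, Vec3 p1, Vec3 p2,
                                  Vec2 uv0, Vec2 uv1, Vec2 uv2) {
    const Vec3 e1 = sub(p1, p0);
    const Vec3 e2 = sub(p2, p0);
    const float du1 = uv1.u - uv0.u, dv1 = uv1.v - uv0.v;
    const float du2 = uv2.u - uv0.u, dv2 = uv2.v - uv0.v;
    const float det = du1 * dv2 - du2 * dv1;
    assert(std::fabs(det) > 1e-12f && "UVs are degenerate for this triangle");
    const float inv = 1.0f / det;
    const Vec3 t = scale(sub(scale(e1, dv2), scale(e2, dv1)), inv);  // (dV2*E1 - dV1*E2) / det
    const Vec3 b = scale(sub(scale(e2, du1), scale(e1, du2)), inv);  // (dU1*E2 - dU2*E1) / det
    return {normalize(t), normalize(b)};
}

// Moves a normal-map sample (already remapped from [0, 1] to [-1, 1]) out of
// tangent space: its x, y and z weight T, B and the surface normal N.
Vec3 tangentToSurface(Vec3 sample, const TangentFrame& frame, Vec3 normal) {
    const Vec3 n = normalize(normal);
    return normalize(add(add(scale(frame.tangent, sample.x),
                             scale(frame.bitangent, sample.y)),
                         scale(n, sample.z)));
}
```

In GLSL the last step is `mat3(T, B, N) * sample`. One trade-off of the geometry-shader approach is that T and B are constant across each triangle, whereas precomputed per-vertex tangents can be averaged across neighbouring faces and so vary smoothly.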

This gave me the last update of this project: